Shiv Shankar

Graduate Student

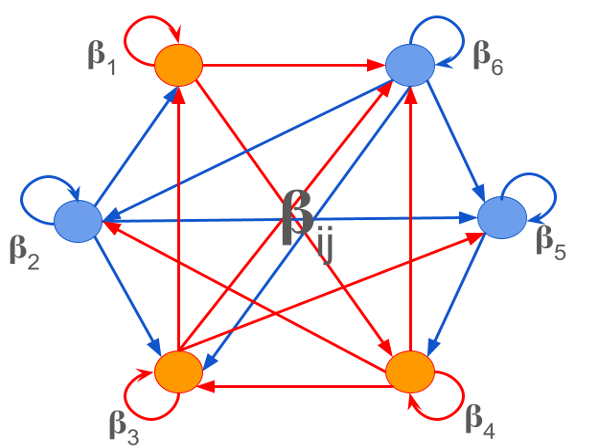

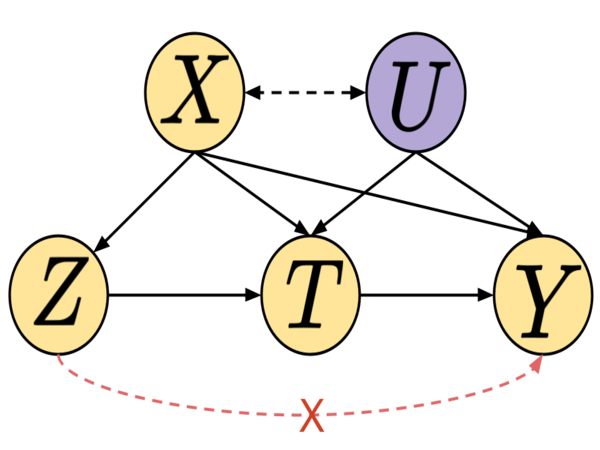

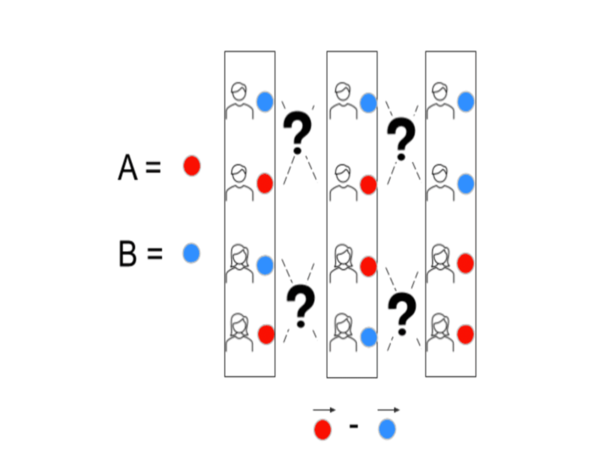

My research centers on developing tools for reasoning and estimation in real-world settings. To this end, I work mostly on machine learning and probabilistic models. I am also interested in grounding this research in applications in sustainability and healthcare.

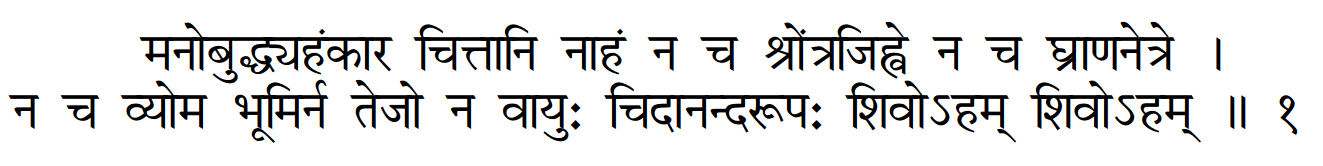

I AM

not mind, intelligence, ego ;

not in

ear, tongue, nose, eye ;

not sky, earth, wind, fire.

form of ETERNAL BLISS