Training computers to generate 3D shapes

Figure 1: User interface for creating and exploring virtual creatures by specifying semantic attributes reflecting high-level design intent.

Digital three-dimensional (3D) content is becoming ubiquitous. 3D printers, augmented reality applications, collaborative virtual environments, physics simulation techniques, and computer-aided design rely on the availability of digital content in the form of 3D shapes and scenes. Unfortunately, creating digital representations of shapes and scenes is largely out of reach for users without extensive experience and training on modeling tools, such as 3DS Max, Maya, ZBrush, Blender, and so on. Even for professional modelers and digital artists, creating a compelling and highly-detailed shape can take several hours or days. The reason is that existing modeling tools require users to interactively specify a series of low-level laborious selection and editing commands; e.g., create and manipulate individual 3D curves, points, or patches.

Assistant Professor Vangelis Kalogerakis' research deals with the development of computer algorithms that generate 3D shapes given high-level design goals and specifications provided by the users. Instead of painstaking low-level commands, the user input to these algorithms are linguistic attributes related to the desired shape category, geometry, and function. Users can interactively specify these attributes with interfaces that also enable exploration of design alternatives (see Figure 1 - More details can be found in "AttribIt: Content Creation with Semantic Attributes, Chaudhuri S., Kalogerakis E., Giguere S., Funkhouser T., Proceedings of ACM UIST 2013").

"How do these algorithms generate 3D shapes?" Kalogerakis answered that the core of these algorithms is machine learning, especially deep learning. The algorithms access large, online repositories of 3D models and learn statistical patterns and relationships between shape parts, patches and feature points. For example, consider chairs. The position and geometry of the legs in a chair are strongly correlated to the position and geometry of the seat, back and armrests, as well as the overall chair style and functionality. These correlations are hierarchically captured with latent variables in probabilistic models trained on the input repositories. Then the algorithm samples the probabilistic models to generate new shapes automatically, or perform statistical inference to generate the most likely shapes given high-level user specifications.

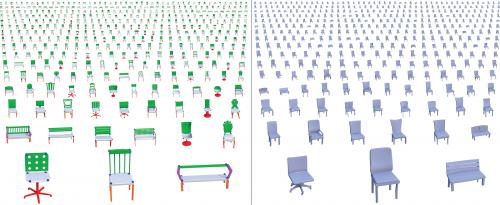

Figure 2 illustrates an example of input and output of the latest, deep learning algorithm developed by Kalogerakis and collaborators. On the left, an input collection of chairs, downloaded from an online repository, is shown. First, the algorithm segments the input shapes into semantic parts (backs, legs, armrests and so on), continues estimating feature point correspondences (shown as blue spheres), and finally learns the probabilistic model based on these processed shapes. Segmentation and correspondences are performed with no or minimal human supervision; e.g., the user provides an exemplar segmentation of a four-legged chair, an office chair and a bench. On the right, Figure 2 demonstrates chairs automatically generated by sampling the learned probabilistic model. The user can also interactively browse the generated shapes or parts with linguistic attributes using exploratory interfaces, such as the one shown in Figure 1. The relationships of shapes and their parts with linguistic attributes are also learned from online repositories with sparsely labeled data.

Figure 2: (Left) Input repository of chairs. Chairs are segmented into semantic parts and feature point correspondences (blue spheres) are estimated across them. (Right) New chairs are synthesized by sampling a probabilistic model trained on the input repository based on deep learning techniques.

"Can the generated shapes be readily used in the physical world or in a virtual environment?" Kalogerakis replied that the generated shapes can be used to populate a virtual world, however, they need further processing to be used in the physical world. The reason is that currently the synthesis algorithm is ignorant of physics and materials. "Nobody guarantees that if you print the chair or its parts with a 3D printer and then sit on it, it will not break!," Kalogerakis said. Ongoing research in the graphics lab involves the development of algorithms that optimize the geometry of a shape and connections between its different parts to ensure its functionality.

There are several other exciting research directions related to automatic shape synthesis. The current algorithms are limited to generate relatively small-scale objects, such as furniture, toys and tools. Generating large-scale shapes, such as vehicles or buildings, require algorithms that understand complex part arrangements, symmetries and topology variations. Functionality is also significantly more complicated in these shapes, since it involves dynamic movement and interactions of their parts. Another interesting future direction is to develop interfaces that allow users to specify attributes related to design styles for controlling shape synthesis. For example, users could specify high-level attributes for buildings, such as "gothic" or "baroque" architectural style. Kalogerakis said that developing algorithms that classify objects into temporal and geographic styles in a similar manner to humans is particularly challenging, since it requires discovering non-trivial patterns across shapes that are largely different in structure. Kalogerakis is currently investigating these research problems together with other members in the computer vision and graphics lab as well as external collaborators. His research is supported by an NSF grant and start-up funds provided by UMass Amherst CS.