Student-led Voices of Data Science Encourages Critical Thinking about Responsible AI

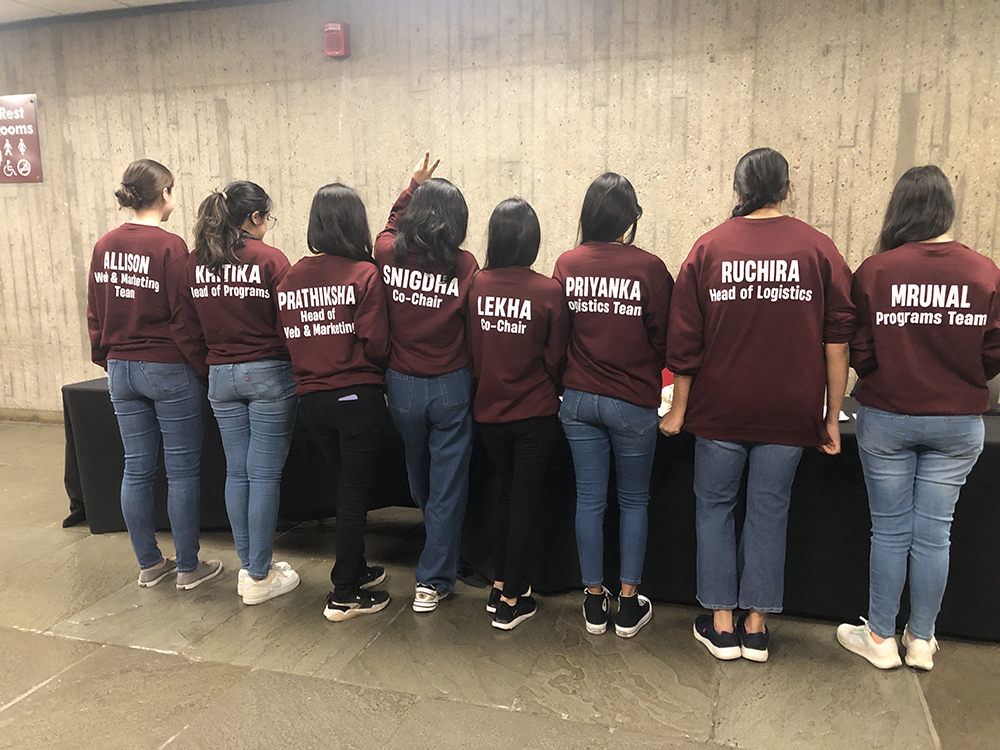

Voices of Data Science organizing team, from left: Ruchira Sharma, Kritika Kapoor, Prathiksha Rumale V, Mrunal Kurhade, Priyanka V Devoor, Lekha Sharma, Snigdha, and Allison Poh

“Take more philosophy courses.” This was the advice from Yann Stoneman, solutions architect at Amazon Web Services, on March 8 to attendees at the 2024 Voices of Data Science conference, a student-led event that aims to amplify the voices of data scientists and foster a robust, inclusive community of individuals who share a vision for utilizing data science ethically.

Stoneman’s talk, “Echo Chambers: Can Bias-Free AI Exist?” fit in well with this year’s event theme, “Responsible AI,” focusing on the dual challenges of reducing AI bias and defining ethical AI. He offered three techniques for mitigating bias: data auditing (reviewing and analyzing data for bias), data augmentation (enhancing dataset diversity), and fine-tuning foundation models. Stoneman also urged collaboration with humanities experts when defining ethical AI and encouraged students to take more humanities courses, such as philosophy.

Earlier in the day, the event opened with a talk from Przemek Grabowicz, Manning College of Information and Computer Sciences (CICS) research assistant professor, who teaches Responsible Artificial Intelligence (COMPSCI 690F) and manages EQUATE, a CICS faculty initiative focused on research and education related to equitable algorithms and systems. Grabowicz asserted that “the way technology is used is much more important than the technology itself,” and provided three examples – health care inequities, bias in political social media polls, and generative models in a world where so-called “open” AI is, in fact, closed.

Another speaker, Kavana Venkatesh, data scientist and Women in Data Science ambassador, discussed the double-edged sword of open-source large language models (LLMs) in the quest for responsible AI. Open-source LLMs, those in which all aspects of the system are accessible by the public, offer transparency and collaboration. These models first emerged as a response to concerns around access and control of this powerful technology. However, they come with challenges such as data privacy and security, inadvertent biases, and vulnerability to attacks. Venkatesh suggested actions that various groups can take to balance the benefits and risks: the general public (advocating for privacy, engaging in ethical discussions, and educating and raising awareness), governments and other agencies (policy engagement, accessibility, and inclusivity), and developers (collaborative development, responsible deployment, and feedback loops).

Speaker DeiMarlon Scisney, AWS ML Hero and founder of HOP Technology Solutions, also spoke about the dual nature of AI – the potential for innovation and the possibility of exacerbating inequalities. Scisney described data privacy as a cornerstone of social justice and urged developers to consider the impact of technology on marginalized communities. He talked about the importance of participatory design (in which users are involved in creating the technologies they utilize) and of including diverse voices to mitigate bias. Scisney closed with a call for an inclusive digital future where technologists, policymakers, and the public take collective responsibility for responsible AI.

The final speaker of the general session, Reshmi Ghosh, applied scientist at Microsoft, talked about safety in LLMs, which are known to be brittle, or easily broken, as evidenced by AI hallucinations such as creating unrealistic or misleading visuals. Ghosh explained that most LLMs have been trained on various types of data and do not have the ability to differentiate between inappropriate and quality content, resulting in the need for meticulous development and monitoring of AI systems to ensure they align with the values and expectations of users. Ghosh described an ongoing process to address prevention of harms that involves identifying potential harms, developing metrics, and monitoring systems to predict and quantify the risks while developing tools and strategies to mitigate harm and observing the effectiveness of the harm mitigation. She concluded by urging audience members to raise concerns often and foster a culture of inclusivity.

After the general session concluded, attendees could opt to attend workshops, including one given by the Public Interest Technology (PIT) Club of PIT@UMass (Public Interest Technology Initiative). In the session “Navigating the World of Deepfakes,” attendees were asked to look at a series of pictures and guess whether each image was authentic or a deepfake, an exercise that demonstrated how realistic deepfake images can be.

Voices of Data Science is an entirely student-run and student-led event produced by Manning CICS master’s and doctoral students. Students have worked in teams since last fall, networking with potential speakers, soliciting sponsorships, and planning logistics for the annual day-long event. Those interested in helping to shape next year’s event can send an email to voicesofds [at] gmail.com.